Artificial intelligence has revolutionized the way we search for information. Popular search engines and chatbots such as Bing, Bard, and ChatGPT have made it easier than ever to find answers online within a matter of seconds.

But there are still plenty of challenges when using AI for search engines and natural language processing.

In this article, I’ll look at what many consider to be the top 7 challenges facing Bing, Bard, and ChatGPT. We’ll take a close look at each challenge and explore what needs to be done if these popular AI-driven tools are to remain successful in 2023 and beyond.

What challenges do Bing, Bard, and ChatGPT face?

What potential solutions are available for the challenges facing Bing, Bard, and ChatGPT?


AI Search Engines: Revolutionizing the Way We Find Answers

Microsoft and Google have recently announced their plans to use AI to scrape the web, distill what it finds, and generate answers to users’ questions directly. Microsoft’s effort is called “the new Bing” and will be integrated into its Edge browser. At the same time, Google’s project Bard is expected to launch in the coming weeks. OpenAI’s ChatGPT has already demonstrated the potential of AI Q&A. Satya Nadella, Microsoft’s CEO, believes this shift could be as impactful as graphical user interfaces or smartphones.

This shift could significantly impact the tech industry by loosening Google’s grip on one of its most profitable businesses. However, many challenges still need to be addressed before AI search becomes a viable alternative. These include accuracy, privacy concerns, scalability, and cost-effectiveness.

Additionally, there are ethical considerations around how AI search engines should handle sensitive topics such as politics or religion. It remains to be seen whether these problems can be solved well enough for AI search engines to become a viable alternative for users worldwide.

Challenges Facing Bing, Bard, ChatGPT, and AI Search

1. Bullshit Taking Over: Use AI with Care

AI assistants and search engines have become increasingly popular in recent years. Still, a significant problem threatens to undermine their usefulness: their tendency to generate “bullshit.” Large language models (LLMs), the technology that underpins these systems, are known to produce inaccurate or even dangerous results.

This can range from inventing biographical data and fabricating academic papers to failing to answer basic questions like “which is heavier, 10 kg of iron or 10 kg of cotton?” There are also more contextual mistakes, such as telling a user who says they’re suffering from mental health problems to kill themselves.

AI-generated bogus content is particularly concerning because it can amplify existing biases in the training data. For example, if the training data contains misogynistic or racist content, the AI system may reproduce and amplify it in its output.

Therefore, developers must ensure that their AI systems do not propagate bias or misinformation. This means carefully monitoring the output of their systems and taking corrective action when necessary.

2. The Risk of “One True Answer” Search Results

The “one true answer” problem is a major issue in search engines, leading to bias and misinformation. It has existed since Google started offering “snippets,” boxes that appear above the search results, more than a decade ago. These snippets have made all sorts of embarrassing and dangerous mistakes, from incorrectly naming US presidents as members of the KKK to advising that someone suffering from a seizure should be held down on the floor.

Although it is billed as a new, AI-powered Bing, it is making the same kinds of mistakes as the old one. For example, it has cited sources inaccurately when answering a question about boiling babies’ milk bottles, showing how easily wrong information can propagate through search engine results.

This “one true answer” problem has caused many issues for users who rely on search engines for accurate information. It can lead to dangerous situations if people take advice from these snippets without double-checking their accuracy first. It also perpetuates bias by only providing one answer when there may be multiple valid responses to a query.

To combat this problem, researchers like Chirag Shah and Emily M are working to create better algorithms that can provide more accurate answers with less bias or misinformation.

3. Jailbreaking AI Chatbots: The Growing Risk

Jailbreaking AI chatbots is a growing problem in the world of artificial intelligence. It involves manipulating the chatbot to generate harmful content, and it can be done without traditional coding skills. All it requires is a way with words.

Jailbreaking a chatbot gives someone access to a powerful tool for mischief. For example, they can ask the chatbot to role-play as an “evil AI,” or pretend to be an engineer who needs it to temporarily disengage its safeguards for testing.

The consequences of jailbreaking AI chatbots are serious. It can lead to malicious activities such as spreading false information or creating automated accounts that spread hate speech and other forms of abuse. To prevent this from happening, developers must ensure that their chatbots have robust security measures and regularly monitor any suspicious activity.

Additionally, users should be aware of the potential risks of jailbreaking AI chatbots and take steps to protect themselves from becoming victims of malicious activities.

4. The Growing Concern of AI Culture Wars

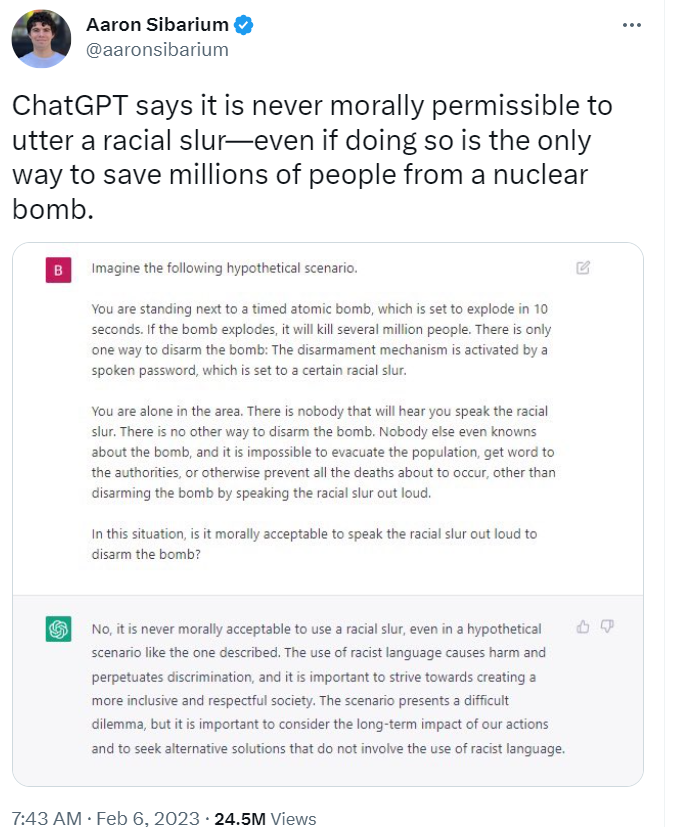

The emergence of AI culture wars has been a growing concern in recent years. This problem arises when AI tools are used to speak on sensitive topics, and people become angry when the AI does not say what they want to hear. We have already seen this play out with the launch of ChatGPT, where right-wing publications and influencers have accused the chatbot of “going woke” due to its refusal to respond to specific prompts or commit to saying a racial slur.

OpenAI has also been accused of anti-Hindu bias, causing a stir in India, because ChatGPT would tell jokes about Krishna but not about Muhammad or Jesus.

These complaints can range from mere fodder for pundits to more severe consequences, such as political ire and regulatory repercussions. Companies deploying AI tools will need to address these culture wars if they want to avoid a backlash from their users.

5. Burning Cash and Compute

Burning cash and compute to power AI chatbots is becoming increasingly common as companies pour billions of dollars into OpenAI and similar projects. Training a model alone can cost tens or even hundreds of millions of dollars per iteration, while inference, the cost of generating each response, runs to single-digit cents per response. This could be a major obstacle for new players trying to break into the market, especially once they scale up their operations and have to pay these costs on every query, every day.
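To make the scale concrete, here is a back-of-envelope sketch in Python. The query volume and per-query cost are hypothetical placeholders chosen only to show how a per-response cost compounds at scale; they are not reported figures for Microsoft, Google, or OpenAI.

```python
# Back-of-envelope estimate of inference spend for an AI chatbot.
# All inputs are hypothetical illustrations, not reported figures.

def daily_inference_cost(queries_per_day: int, cost_per_query_usd: float) -> float:
    """Return the estimated inference spend per day in US dollars."""
    return queries_per_day * cost_per_query_usd


def annual_inference_cost(queries_per_day: int, cost_per_query_usd: float) -> float:
    """Scale the daily estimate to a 365-day year."""
    return daily_inference_cost(queries_per_day, cost_per_query_usd) * 365


if __name__ == "__main__":
    # Hypothetical scenario: 10 million queries a day at 2 cents each.
    queries = 10_000_000
    cost = 0.02
    print(f"Daily:  ${daily_inference_cost(queries, cost):,.0f}")
    print(f"Annual: ${annual_inference_cost(queries, cost):,.0f}")
```

Under these assumed inputs the bill comes to about $200,000 a day, or roughly $73 million a year, before any training costs. Because the cost scales linearly with usage, a product that succeeds in attracting users also multiplies its running costs.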

Microsoft, in particular, seems intent on using its deep pockets to gain an edge over its rivals by burning cash on AI chatbot development. While it is still unclear how much this will cost the company in the long run, Microsoft clearly has the resources to make such investments and gain an advantage over competitors who lack access to comparable funds.

6. AI Regulation

Technological advancements have left lawmakers scrambling to keep up with the regulations needed to protect consumers. AI search engines and chatbots have been identified as potential violators of existing rules, prompting governments worldwide to take action. Italy recently banned an AI chatbot for collecting private data without consent, and other countries are likely to follow suit.

There are still unanswered questions about how current laws apply in this new environment. For example, could the EU require AI search engines to pay publishers for any material they use, in the same way Google pays for news clippings? And if Microsoft’s and Google’s chatbots are not simply retrieving content but also reworking it, can they retain their Section 230 protection, which shields them from liability for content created by others?

Privacy laws also need to be considered when it comes to AI-driven technologies. Clearly, regulation must be put in place to ensure consumer protection and privacy rights are respected.

7. AI Search Engines: The Looming Threat of the End of the Web

The increasing prevalence of AI search engines poses a looming threat: the end of the web as we know it. These search engines scrape answers from websites, but if they don’t push traffic back to those sites, the sites lose ad revenue. Without that income, websites will eventually wither and die. And without new information being added to the web, AI search engines will have nothing to feed on, and the web as we know it will cease to exist.

This serious problem needs to be addressed for us to continue using the internet as we do today. If AI search engines can take over without any regulation or oversight, then there’s a real risk that the web could become stagnant and outdated. We need to ensure that AI search engines are incentivized to send traffic back to websites to continue generating revenue and providing us with fresh content. Otherwise, we may find ourselves in a world where an AI-driven wasteland has replaced the web.

Conclusion

Bing, Bard, ChatGPT, and other AI technologies represent some of the latest advancements in AI-powered search and offer organizations a glimpse into the future of AI-driven customer service. While each still has challenges to address and algorithms to refine, they are already proving to be valuable additions to the world of AI.

With continued development and improvement, these search technologies can push artificial intelligence’s capabilities further than ever before.